How to cite it:

Ganney P, 2023, Introduction to Bioinformatics and Clinical Scientific Computing, Boca Raton and London: CRC Press

Some of the material for this book has changed since it was published in early 2023. Also, the figures were all rendered in B&W, whereas colour often made it easier to see what was going on. This page contains those images, together with text that has changed/been added since then. If there's a second edition, then it'll appear in that. If not, then it's here.

All chapters are listed, but only the subsections that have changes are listed under them. Subsections not listed therefore have no changes (yet).

Of course, copyright material (that I don't have permission to reproduce) and bad jokes still only exist in the lectures, so aren't here. Likewise exercises (and especially the solutions).


Example of stack use for variables:

| CODE | VALUE OF b | STACK |
|---|---|---|
| Var b=7; | 7 | Empty |
| Print factorial(b); | 7 | |
| : | | |
| Function factorial(var a) | 7 | |
| { | | |
| Var b=1; | 1 | b=7 |
| While(a>0) | | |
| { | | |
| b=b*a; | 7;42;210;840;2520;5040;5040 | |
| a=a-1; | | |
| } | | |
| } | 7 | Empty |
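The STACK column can be modelled in a few lines of Python. This is a minimal sketch under one assumption: the caller's `b` is saved on an explicit list when the function is entered and restored when it exits. Real languages do this automatically with stack frames. The `stack` and `trace` lists are purely illustrative names.

```python
stack = []   # models the STACK column: saved values of the caller's b
trace = []   # records each value b takes inside the loop
b = 7        # the caller's variable

def factorial(a):
    global b
    stack.append(b)   # entering the function: save the caller's b (b=7)
    b = 1             # the function's own b
    while a > 0:
        b = b * a
        trace.append(b)
        a = a - 1
    result = b
    b = stack.pop()   # leaving the function: restore the caller's b
    return result

print(factorial(b))  # 5040
print(b, stack)      # 7 []
```

The values in `trace` match the "VALUE OF b" column row for row. The stack is empty again once the function returns.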

"is such often used" changed to "are often used"

Let’s go a little further, so we can really understand this concept and the importance of it.

“Multivalued dependency (MVD) deals with complex attribute relationships in which an attribute may have many independent values while yet depending on another attribute or group of attributes. It improves database structure and consistency and is essential for data integrity and database normalization.” (Reference: https://www.geeksforgeeks.org/dbms/multivalued-dependency-mvd-in-dbms/)

MVD means that for a single value of attribute 'a' multiple values of attribute 'b' exist. We write it as a->->b, as before. This is read as “a multidetermines b” (equivalently, “b is multi-valued dependent on a”). Looking at the previous table we can see that Title and Label are multivalued attributes, as they have more than one value for a single Artist. So Title is multi-valued dependent on Artist, as is Label, and we write Artist->->Title and Artist->->Label (Footnote: There is a fully worked example at https://www.geeksforgeeks.org/dbms/multivalued-dependency-mvd-in-dbms/).

This concept is important because multivalued dependencies can lead to data errors and redundancy. Normalising the database to 4NF removes non-trivial multivalued dependencies, and with them this potential issue. For example, the Beatles not only released Help! on two labels, but did so for several albums, including A Hard Day’s Night, so part of the table might look like:

| Artist | Title | Label |
|---|---|---|
| Beatles | Help! | Parlophone |
| Beatles | Help! | United Artists |
| Beatles | A Hard Day’s Night | Parlophone |
| Beatles | A Hard Day’s Night | United Artists |

This clearly shows the redundancy: every title is repeated for every label, and every label for every title.
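The following minimal Python sketch tests the dependency and performs the 4NF split, using sets of tuples to stand in for tables. It relies on the definition that Artist->->Title holds in a three-column table when, for each artist, the (Title, Label) pairs form every combination of that artist's titles and labels. The function name `mvd_holds` is illustrative only.

```python
from itertools import product

# The rows from the table above: (Artist, Title, Label)
rows = {
    ("Beatles", "Help!", "Parlophone"),
    ("Beatles", "Help!", "United Artists"),
    ("Beatles", "A Hard Day's Night", "Parlophone"),
    ("Beatles", "A Hard Day's Night", "United Artists"),
}

def mvd_holds(rows):
    """True if Artist ->-> Title (and so Artist ->-> Label) holds: for every
    artist, the (Title, Label) pairs are all combinations of that artist's
    titles and labels, i.e. titles and labels vary independently."""
    for artist in {a for a, _, _ in rows}:
        pairs = {(title, label) for a, title, label in rows if a == artist}
        titles = {title for title, _ in pairs}
        labels = {label for _, label in pairs}
        if pairs != set(product(titles, labels)):
            return False
    return True

# 4NF decomposition: one table per independent fact about an artist
artist_title = {(a, title) for a, title, _ in rows}
artist_label = {(a, label) for a, _, label in rows}

# A natural join on Artist rebuilds the original rows, so nothing is lost
rejoined = {(a, title, label)
            for a, title in artist_title
            for a2, label in artist_label if a == a2}

print(mvd_holds(rows), rejoined == rows)  # True True
```

For one artist with n titles and m labels, the single table needs n × m rows. The two 4NF tables need only n + m rows between them.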

Dimension tables need populating first; otherwise, when you populate the fact table, you’re trying to reference a dimension that doesn’t yet exist. The dimension tables themselves could, of course, be populated concurrently.

The fact/dimension arrangement is essentially a lookup (or data dictionary) structure. A typical OLAP database resembles a star or snowflake schema, with a central fact table that contains measurable events surrounded by dimension tables that contain descriptive attributes for analysis.
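This is a minimal star-schema sketch using Python's built-in `sqlite3` module. It shows why the dimensions must be loaded before the facts. The tables `dim_ward`, `dim_date` and `fact_admission` are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when asked

# Dimension tables: descriptive attributes (hypothetical example schema)
conn.execute("CREATE TABLE dim_ward (ward_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, iso_date TEXT)")

# Central fact table: measurable events, each referencing the dimensions
conn.execute("""
    CREATE TABLE fact_admission (
        ward_id INTEGER REFERENCES dim_ward(ward_id),
        date_id INTEGER REFERENCES dim_date(date_id),
        admissions INTEGER
    )""")

def load_fact():
    conn.execute("INSERT INTO fact_admission VALUES (1, 20230828, 12)")

try:
    load_fact()                    # the dimension tables are still empty
    first_attempt_failed = False
except sqlite3.IntegrityError as err:
    first_attempt_failed = True
    print("Fact load failed:", err)

# Populate the dimensions first (these two could run in either order)...
conn.execute("INSERT INTO dim_ward VALUES (1, 'Cardiology')")
conn.execute("INSERT INTO dim_date VALUES (20230828, '2023-08-28')")
load_fact()  # ...and now the fact row can reference them

# The dimensions act as lookups when the facts are analysed
report = conn.execute("""
    SELECT w.name, d.iso_date, f.admissions
    FROM fact_admission f
    JOIN dim_ward w ON w.ward_id = f.ward_id
    JOIN dim_date d ON d.date_id = f.date_id""").fetchall()
print(report)
```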

New online reference for Mullins (2012): https://www.informit.com/store/database-administration-the-complete-guide-to-dba-practices-9780133012736

If your database engine is PostgreSQL, then it has a very useful ON CONFLICT clause that allows you to manage situations where an insert or update operation would violate a unique constraint, such as a primary key. Instead of throwing an error, you can specify how to handle the conflict.
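The sketch below uses Python's built-in `sqlite3` as a stand-in for PostgreSQL, so it runs without a database server. Since version 3.24.0, SQLite has supported an upsert clause modelled on PostgreSQL's `ON CONFLICT`, including the `excluded` pseudo-table. The `ward` table is hypothetical. In PostgreSQL itself the same statements should behave alike, but check its documentation for your version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ward (code TEXT PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO ward VALUES ('W1', 'Cardiology')")

# Same key again: DO UPDATE turns the clash into an update ("upsert").
# `excluded` refers to the row that could not be inserted.
conn.execute("""
    INSERT INTO ward (code, name) VALUES ('W1', 'Cardiology and Renal')
    ON CONFLICT (code) DO UPDATE SET name = excluded.name""")

# Same key again: DO NOTHING keeps the existing row and raises no error.
conn.execute("""
    INSERT INTO ward (code, name) VALUES ('W1', 'Oncology')
    ON CONFLICT (code) DO NOTHING""")

rows = conn.execute("SELECT code, name FROM ward").fetchall()
print(rows)  # [('W1', 'Cardiology and Renal')]
```

Without the `ON CONFLICT` clause, either insert would raise `sqlite3.IntegrityError` because it violates the primary key.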

Correction: in the section on HAVING, "It is similar to the WHERE clause, expect that it allows aggregate functions, whereas WHERE does not.", "expect" should be "except".
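This small example, again using Python's built-in `sqlite3` with a hypothetical `admission` table, shows the HAVING/WHERE distinction. WHERE filters individual rows before grouping. HAVING filters the groups afterwards, which is why it can use an aggregate such as `COUNT(*)`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE admission (ward TEXT, year INTEGER)")
conn.executemany("INSERT INTO admission VALUES (?, ?)", [
    ("Cardiology", 2023), ("Cardiology", 2023), ("Cardiology", 2024),
    ("Renal", 2023), ("Renal", 2024), ("Oncology", 2023),
])

# WHERE keeps only the 2023 rows; HAVING then keeps wards with more than one
result = conn.execute("""
    SELECT ward, COUNT(*) FROM admission
    WHERE year = 2023
    GROUP BY ward
    HAVING COUNT(*) > 1""").fetchall()
print(result)  # [('Cardiology', 2)]

# Putting the aggregate in WHERE instead is rejected by the database engine
try:
    conn.execute("SELECT ward FROM admission WHERE COUNT(*) > 1 GROUP BY ward")
    where_aggregate_rejected = False
except sqlite3.OperationalError as err:
    where_aggregate_rejected = True
    print("Rejected:", err)
```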

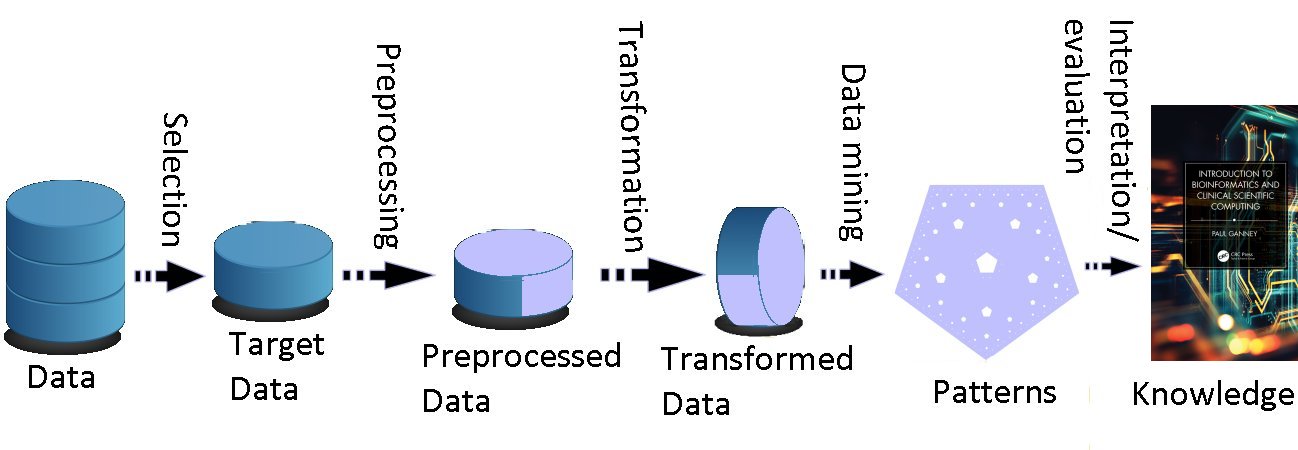

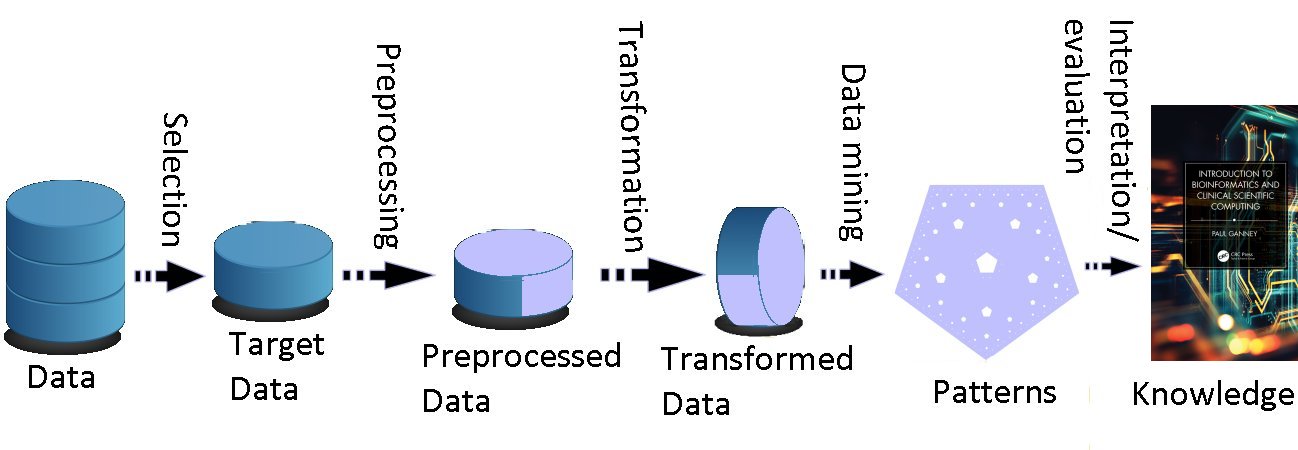

Reference has changed: Fayyad U, Piatetsky-Shapiro G, and Smyth P, 1996, From Data Mining to Knowledge Discovery in Databases. AI Magazine [online] Fall 1996 p.37-54. Available: https://ojs.aaai.org/aimagazine/index.php/aimagazine/article/view/1230 [Accessed 24/06/24]

New KDD figure:

adaptive spline methods (Footnote: Effectively a method of dividing the data into sections (the points at which the data is divided are termed “knots”) and using regression to fit an equation to the data in each section), and projection pursuit regression (Footnote: A statistical model which is an extension of additive models, adapting by first projecting the data matrix of explanatory variables in the optimal direction before applying smoothing functions to these explanatory variables).
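The footnote's idea (divide the data at knots, then fit a regression in each section) can be sketched in plain Python. The knot is chosen by hand here and each section is fitted independently, so the lines need not meet at the knot. Genuinely adaptive methods such as MARS choose the knots automatically and usually keep the pieces joined. The data and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def piecewise_fit(xs, ys, knots):
    """Divide the data at the knots and fit a separate line to each section.
    Each section needs at least two distinct x values."""
    edges = [float("-inf"), *sorted(knots), float("inf")]
    fits = []
    for low, high in zip(edges, edges[1:]):
        section = [(x, y) for x, y in zip(xs, ys) if low <= x < high]
        sx, sy = zip(*section)
        fits.append((low, high, fit_line(sx, sy)))
    return fits

# Hypothetical data that rises then falls, with a single knot at x = 5
xs = list(range(10))
ys = [0, 2, 4, 6, 8, 10, 8, 6, 4, 2]
fits = piecewise_fit(xs, ys, knots=[5])
for low, high, (a, b) in fits:
    print(f"{low} <= x < {high}: y = {a:.1f} + {b:.1f}x")
```

A single straight line through all ten points would miss the change in direction. One line per section captures it exactly.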

Antonio Eleuteri gave this warning: a classifier/pattern recogniser cannot be validated only for discrimination accuracy (i.e. sensitivity, specificity, AUC etc.); it must also be validated for calibration accuracy (i.e. the bias of its predicted class-membership probabilities must be estimated). You can have a pattern classifier with an AUC of 100% which is still useless in practice because, for the case of two classes say, it outputs 0.51 for every member of class 1 and 0.49 for every member of class 2: this is perfect discrimination, but extremely poor calibration. There is also the issue of reporting the errors of the estimate. Each output must come with a confidence interval, because the user needs to know how confident the AI system is in the answers that it gives.
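The two-class example can be made concrete with the sketch below. It assumes that "0.51 for class 1" means the model's predicted probability of class 1 is 0.51 for every true class-1 case and 0.49 for every true class-2 case (coded 0). Discrimination is measured by AUC in its pairwise (Mann-Whitney) form. For calibration, the Brier score and a simple observed-versus-predicted check are used; these are choices made here, not measures named in the warning.

```python
def auc(scores, labels):
    """Probability that a random positive scores above a random negative
    (ties count half): the Mann-Whitney form of the ROC AUC."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def brier(scores, labels):
    """Mean squared gap between predicted probability and outcome (0 is perfect)."""
    return sum((s - y) ** 2 for s, y in zip(scores, labels)) / len(labels)

labels = [1] * 50 + [0] * 50          # 50 cases of each class
scores = [0.51] * 50 + [0.49] * 50    # model's P(class 1) for each case

print(auc(scores, labels))    # 1.0: perfect discrimination
print(brier(scores, labels))  # about 0.24: poor calibration

# Among cases given 0.51, how many really are class 1?
given_051 = [y for s, y in zip(scores, labels) if s == 0.51]
observed_rate = sum(given_051) / len(given_051)
print(observed_rate)  # 1.0, far from the 0.51 the model claims
```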

Step 5: (Footnote: We do this by arranging the eigenvalues in numeric order (largest first) and summing them until 90% of the total value is reached. We retain the eigenvectors that correspond to these summed eigenvalues.)
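The selection rule in the footnote amounts to a running total. This is a minimal sketch that returns the indices of the eigenvalues to keep, so the matching eigenvectors can then be picked out. The eigenvalues are hypothetical and the 90% threshold is a parameter.

```python
def components_to_keep(eigenvalues, threshold=0.9):
    """Indices of the eigenvalues, largest first, whose running total first
    reaches `threshold` of the total; keep the matching eigenvectors."""
    order = sorted(range(len(eigenvalues)), key=lambda i: eigenvalues[i], reverse=True)
    total = sum(eigenvalues)
    kept, running = [], 0.0
    for i in order:
        kept.append(i)
        running += eigenvalues[i]
        if running / total >= threshold:
            break
    return kept

eigenvalues = [1.0, 4.0, 0.25, 2.5, 0.75, 1.5]  # hypothetical, total 10
print(components_to_keep(eigenvalues))  # [1, 3, 5, 0]: 4.0+2.5+1.5+1.0 = 90%
```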

There's only one neuron in the output layer (Fig 4.16)

In 2022, new reporting guidelines (DECIDE-AI) were jointly published in Nature Medicine and the BMJ by Oxford researchers. These are intended to ensure that early studies using AI with real patients report the information researchers need to develop AI systems safely and effectively.

References: Vasey B et al, 2022, Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nature Medicine (2022) [online]. Available: DOI: 10.1038/s41591-022-01772-9 [Accessed 03/05/23]

Vasey B et al, 2022, Reporting guideline for the early stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. BMJ (2022) [online]. Available: DOI: 10.1136/bmj-2022-070904 [Accessed 24/06/24]

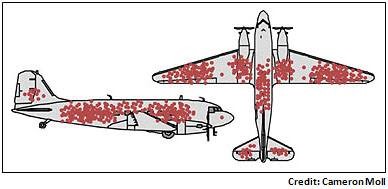

There is a classic case of poor data interpretation from the second world war, involving the placement of armour on aircraft (Footnote: Story adapted from https://johnmjennings.com/an-important-lesson-from-bullet-holes-in-planes/). Armour protects against bullets but it is heavy. Too much and the planes won’t perform well but too little leaves the planes exposed. The US Army (there was no Air Force yet) faced this problem: where should they put armour on bombers in order to reduce casualties?

During WWII the US had a group of mathematicians and statisticians, called the Statistical Research Group (SRG), who used statistical analysis to help the war effort. The question of where armour should be placed on planes was brought to the SRG and assigned to Abraham Wald, an Austrian Jew who had fled the Nazis before WWII and taught maths and statistics at Columbia University.

The Army gave Wald a lot of useful data — they had recorded the average number of bullet holes per square foot on planes that had come back from bombing missions. The data revealed that there were many bullet holes in the fuselage and wings, but few holes in the engines. The data looked like this:

The Army was planning on adding armour just to the parts of the plane that tended to get most riddled with bullets and they asked Wald to help them determine exactly where the armour should go.

Wald, though, had a different view. He said that the armour shouldn’t go where the bullet holes were; instead, it should go where there weren’t any holes. His insight was to realise that, across all the planes flying missions, the bullet holes should be roughly evenly distributed: there is no reason why anti-aircraft guns fired from thousands of feet away would hit just the wings and fuselage, after all. It is the planes that didn’t return that were presumably hit in the engines, and that’s where the armour should go.
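Wald's reasoning can be checked with a small simulation. This is a sketch built entirely on made-up assumptions: three equally likely hit areas, three hits per plane, and an 80% chance of losing any plane with an engine hit. Hits land evenly across all planes, yet the engine is under-represented among the planes that come back to be inspected.

```python
import random
from collections import Counter

SECTIONS = ("fuselage", "wings", "engine")  # assumed equally likely to be hit

def simulate(n_planes=10_000, hits_per_plane=3, p_lost_if_engine_hit=0.8, seed=1):
    rng = random.Random(seed)
    all_hits, returned_hits = Counter(), Counter()
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        lost = "engine" in hits and rng.random() < p_lost_if_engine_hit
        if not lost:
            returned_hits.update(hits)  # only returning planes can be inspected
    return all_hits, returned_hits

def share(counter, section):
    return counter[section] / sum(counter.values())

all_hits, returned_hits = simulate()
print(f"engine share, all planes:       {share(all_hits, 'engine'):.2f}")
print(f"engine share, returning planes: {share(returned_hits, 'engine'):.2f}")
```

Counting holes only on the returning planes would suggest that engines are rarely hit. That is exactly the survivorship error.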

Wald’s insight was to identify a logical fallacy called “survivorship bias”: the error of concentrating on the people or things that made it past some selection process while overlooking those that did not, typically because of their lack of visibility. Survivorship bias is commonly overlooked and can lead to bad decisions, so keeping the bullet hole story in mind is a powerful mental model for making better decisions. There are plenty of examples on the Internet, but this story is a good aide-mémoire of the problem of focussing only on the survivors, and this graphic is a good one to keep in mind when making decisions based on data (question whether the data only focuses on the survivors):

The reference for the Datasaurus Dozen has changed and is now: Matejka J and Fitzmaurice G (2017). Same Stats, Different Graphs: Generating Datasets with Varied Appearance and Identical Statistics through Simulated Annealing, Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems May 2017 Pages 1290–1294 [online] Available: https://dl.acm.org/doi/10.1145/3025453.3025912 [Accessed 15/05/24]

A good illustration of this (pie charts being hard to read) is the Jastrow Illusion, named after the psychologist Joseph Jastrow, who discovered it in 1889.

Mighty Optical Illusions. Available: https://moillusions.com/video-jastrow-illusion-in-action/ [Accessed 12/6/25]

Iterative rounding can also cause errors in interpretation: 8.45 might be rounded to 8.5, and then to 9, whereas rounding 8.45 to no decimal places directly would instead give 8.
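The rounding example can be reproduced in Python with the `decimal` module and an explicit round-half-up rule. Python's built-in `round()` is avoided here for two reasons: it works on binary floats, which cannot store 8.45 exactly, and it rounds halves to even. Either could blur the example.

```python
from decimal import Decimal, ROUND_HALF_UP

value = Decimal("8.45")  # exact decimal value, unlike the float 8.45

one_dp = value.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)    # first to 1 d.p.
iterated = one_dp.quantize(Decimal("1"), rounding=ROUND_HALF_UP)  # then to 0 d.p.
direct = value.quantize(Decimal("1"), rounding=ROUND_HALF_UP)     # straight to 0 d.p.

print(one_dp, iterated, direct)  # 8.5 9 8
```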

AND as NAND:

A.B=(A(NAND)B)(NAND)(A(NAND)B)

OR as NAND:

A+B=(A(NAND)A)(NAND)(B(NAND)B) (as it’s NOT(NOT A AND NOT B), by De Morgan’s laws).
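Both identities can be confirmed by exhaustive truth table. This short sketch builds AND and OR from a single `nand` function and checks all four input combinations. It uses the all-NAND form of OR, (A NAND A) NAND (B NAND B).

```python
from itertools import product

def nand(a, b):
    return not (a and b)

def and_via_nand(a, b):
    x = nand(a, b)
    return nand(x, x)                      # NOT(A NAND B) = A.B

def or_via_nand(a, b):
    return nand(nand(a, a), nand(b, b))    # (NOT A) NAND (NOT B) = A+B

for a, b in product([False, True], repeat=2):
    assert and_via_nand(a, b) == (a and b)
    assert or_via_nand(a, b) == (a or b)
print("NAND identities hold for all four input combinations")
```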

IP address 948 changed to 148 (twice)

Footnote: Both TLS (Transport Layer Security) and SSL (Secure Sockets Layer) are protocols that help to authenticate and securely transport data on the Internet, and both underpin HTTPS. SSL was deprecated in 2015 and replaced by TLS, although phrases such as “SSL certificate” are still in use and are valid. TLS can thus be thought of as “the latest version of SSL”.

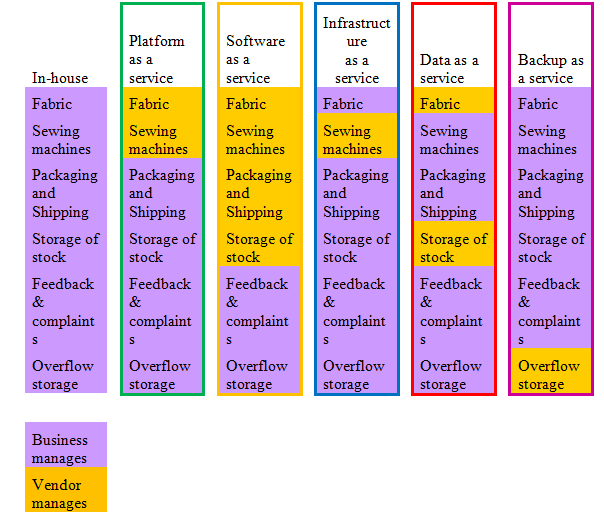

Changed reference: Health Tech Newspaper [online]. Available: https://htn.co.uk/2020/01/27/nhs-digital-moves-national-services-to-the-cloud/ [Accessed 09/07/25]

Updated paragraph: Save9.com used to have a good analysis of the issues (but it’s disappeared now). It was written from a supplier perspective so had a bias towards the advantages but was essentially correct – i.e. you can use cloud services, provided you do it in the right way (compliance with the IG toolkit and the DPA plus a local risk assessment for example).

Faster is of course possible:

| Ethernet Cable | Max Data Transfer Speed | Max Bandwidth | Optimal Cable Length for Max Speed | Common Use Cases |
|---|---|---|---|---|
| Cat5e | 1 Gigabit per second (Gbps) | 100 Megahertz (MHz) | Up to 100 meters | Residential networking, basic office use |
| Cat6 | 10 Gbps | 250 MHz | Up to 55 meters for 10 Gbps, 100 meters for lower speeds | General office networking, some data center applications |
| Cat6a | 10 Gbps | 500 MHz | Up to 100 meters | Advanced office networks, data centers, industrial applications |
| Cat7 | 10 Gbps | 600 MHz | Up to 100 meters | High-speed networks, data centers, server rooms |
| Cat8 | 25-40 Gbps | 2000 MHz | Up to 30 meters | High-performance data centers, server-to-server connections |

Addition to footnote: especially with 802.11be (Wi-Fi 7) (ratified in September 2024) which has a theoretical maximum of 46Gbps

Parsing examples:

“Traditionally, parsing is done by taking a sentence and breaking it down into different parts of speech. The words are placed into distinct grammatical categories, and then the grammatical relationships between the words are identified, allowing the reader to interpret the sentence. For example, take the following sentence:

The man opened the door.

To parse this sentence, we first classify each word by its part of speech: the (article), man (noun), opened (verb), the (article), door (noun). The sentence has only one verb (opened); we can then identify the subject and object of that verb. In this case, since the man is performing the action, the subject is man and the object is door. Because the verb is opened—rather than opens or will open—we know that the sentence is in the past tense, meaning the action described has already occurred. This example is a simple one, but it shows how parsing can be used to illuminate the meaning of a text. Traditional methods of parsing may or may not include sentence diagrams. Such visual aids are sometimes helpful when the sentences being analyzed are especially complex.” (Reference: ThoughtCo. [online]. Available: https://www.thoughtco.com/parsing-grammar-term-1691583 [Accessed 07/07/25])

This piece of computer code

for(i=0;i<25;i++)

may thus be parsed as:

Command (for)

Parameters (the stuff in brackets)

The parameters may also be parsed further into

Starting condition (i=0)

Stopping condition (i<25)

What to do at the end of each iteration (i++)

The starting condition can then be further parsed into

Variable (i)

Operator (=, i.e. assignment)

Value (0)

And so on.
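The same breakdown can be done mechanically. This is a minimal sketch that uses regular expressions and a hypothetical, much-simplified grammar: a `for` statement contains three semicolon-separated parameters, and the starting condition is a single assignment. Real compilers use a proper tokeniser and grammar rather than regular expressions. The function `parse_for` is illustrative only.

```python
import re

# Hypothetical, simplified grammar: for(<start>;<stop>;<step>)
FOR_STATEMENT = re.compile(
    r"^\s*(?P<command>for)\s*\((?P<start>[^;]*);(?P<stop>[^;]*);(?P<step>[^)]*)\)\s*$"
)
# <start> is assumed to be a simple assignment: <variable> = <value>
ASSIGNMENT = re.compile(r"^\s*(?P<variable>\w+)\s*(?P<operator>=)\s*(?P<value>\S+)\s*$")

def parse_for(line):
    statement = FOR_STATEMENT.match(line)
    if statement is None:
        raise ValueError(f"not a for statement: {line!r}")
    start = ASSIGNMENT.match(statement["start"])
    if start is None:
        raise ValueError(f"unsupported starting condition: {statement['start']!r}")
    return {
        "command": statement["command"],
        "parameters": {
            "start": start.groupdict(),           # variable, operator, value
            "stop": statement["stop"].strip(),    # stopping condition
            "step": statement["step"].strip(),    # end-of-iteration action
        },
    }

print(parse_for("for(i=0;i<25;i++)"))
```

The nested dictionary mirrors the levels described above: command, then parameters, then the parts of the starting condition.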

For a further example of parsing, see the DOM model in the chapter on Web Programming.

The world of web services is no longer "new"

Data must be secured at all stages of its lifecycle: collecting, storing, processing, updating and disposal.

In the Vigenère example, n has the value 13, not 14.
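For reference, here is a minimal Vigenère sketch. It uses the widely quoted LEMON/ATTACKATDAWN example rather than the book's own example, which is not reproduced here. It assumes upper-case A to Z only, with A=0 through Z=25, and each letter is shifted by the matching letter of the repeating key.

```python
from itertools import cycle

def vigenere(text, key, decrypt=False):
    """Shift each letter of `text` by the matching letter of the repeating
    `key` (A=0 ... Z=25). Assumes both are upper-case A-Z only."""
    sign = -1 if decrypt else 1
    out = []
    for t, k in zip(text, cycle(key)):
        shift = (ord(k) - ord("A")) * sign
        out.append(chr((ord(t) - ord("A") + shift) % 26 + ord("A")))
    return "".join(out)

cipher = vigenere("ATTACKATDAWN", "LEMON")
print(cipher)                                   # LXFOPVEFRNHR
print(vigenere(cipher, "LEMON", decrypt=True))  # ATTACKATDAWN
```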

New reference: https://service-manual.nhs.uk/

The latest standard is HTML 5 and although 5.1 and 5.2 were released, work has coalesced around the Living Standard instead (WHATWG [online]).

There are online references to HTML6 and HTML7 but these don’t really exist and originated in a parody account.

It is, of course, possible to have a great deal of the web site generated by scripts. This is especially useful when the page(s) should display different information depending on the date/time and can prevent having to edit the page frequently in order to provide the correct information. As an example, the web site https://www.tenerifevirtual.church/ uses scripts to insert the correct values into the text in order to display the correct links and themes for that week’s service, which was very useful when I was away for 2 months as all the work could be done in advance and just happen.

One other use of scripts (from the same site) is to move the frequently-altered text to the top of the page and assign it to variables, so that all the required edits are in the same place (previously I had loads of comments such as “THURSDAY” and had to search for them).
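The site's own scripts aren't reproduced here. As an illustration of the two ideas, this Python sketch works out the next service date automatically and keeps the frequently-altered values together at the top. The themes, the URL and the weekly rotation are all hypothetical placeholders.

```python
import datetime
from string import Template

# --- Frequently-altered values, kept together at the top (all hypothetical) ---
THEMES = ["Hope", "Peace", "Joy", "Love"]   # cycled through week by week
STREAM_URL = "https://example.org/live"     # placeholder, not the real site's link

PAGE = Template('<p>Next service: $date (theme: $theme). '
                '<a href="$url">Join online</a></p>')

def next_sunday(today):
    """Return today if it is Sunday, otherwise the coming Sunday."""
    days_ahead = (6 - today.weekday()) % 7   # weekday(): Monday=0 ... Sunday=6
    return today + datetime.timedelta(days=days_ahead)

def render(today):
    sunday = next_sunday(today)
    week_number = sunday.isocalendar()[1]
    theme = THEMES[week_number % len(THEMES)]
    return PAGE.substitute(date=sunday.strftime("%d %B %Y"), theme=theme, url=STREAM_URL)

print(render(datetime.date.today()))
```

Because the date is calculated rather than typed in, the page stays correct week after week without anyone editing it.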

And the same thing in a mix of bash and Python…

#!/bin/bash

# greeting.py prints its own Content-Type header, so none is echoed here.
# %w gives the day of the week as 0 (Sunday) to 6 (Saturday).

python greeting.py "$(date +"%H")" "$(date +"%w")"

import sys

hour = int(sys.argv[1])

weekday = int(sys.argv[2])

print("Content-Type: text/html\n")

print("<html>\n<head>\n</head>\n<body>")

if hour < 10:

    print("<b>Good morning</b>")

else:

    print("Good day!")

print("<p>")

if weekday == 3:

    print("Woeful Wednesday")

elif weekday == 5:

    print("Finally Friday")

elif weekday == 6:

    print("Super Saturday")

elif weekday == 0:

    print("Sleepy Sunday")

else:

    print("I'm looking forward to this weekend!")

print("</body>\n</html>")

It’s worth noting that, as PHP executes server-side, the script can contain things like database login details without exposing them to the client side (and so risking a data breach).

https://www.owasp.org/images/c/c3/Top10PrivacyRisks_IAPP_Summit_2015 is now https://wiki.owasp.org/images/c/c3/Top10PrivacyRisks_IAPP_Summit_2015.

NHS Digital published a set of security standards as a minimum benchmark when developing web services for the NHS, now subsumed into the Data Security Standard. The requirements in the standard are:

• All web services must be Hypertext Transfer Protocol Secure (HTTPS) sites. They must use suitable security protocols and have an in-date security certificate. Sites should use Transport Layer Security (TLS) rather than the deprecated Secure Sockets Layer (SSL) protocols. TLS must be version 1.2 or higher.

• All sites must have been tested adequately before being deployed. This includes a security penetration test before the website goes live.

• All web pages must be written in valid HTML5. This means that pages should not contain code which is deprecated in HTML5 and should pass validation on the W3C validator.

• All services must meet the AA standard of the Web Content Accessibility Guidelines (WCAG) 2.1. Where possible, the AAA standard should be met.

• All websites must meet the requirements of the General Data Protection Regulation (GDPR) and the Privacy and Electronic Communications Regulations (PECR).
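The TLS requirement can be illustrated from the client side with Python's standard `ssl` module. This sketch shows a context that refuses anything older than TLS 1.2 and keeps certificate and hostname checking switched on. It only configures the connecting client, not the web server. A server would need its own configuration, which is not shown.

```python
import ssl

# Start from Python's recommended client settings
context = ssl.create_default_context()
# Refuse anything older than TLS 1.2, matching the standard's minimum
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.minimum_version)   # TLSVersion.TLSv1_2
print(context.verify_mode)       # certificates must be valid
print(context.check_hostname)    # and must match the site's name
```

The context can then be passed to, for example, `urllib.request.urlopen(url, context=context)`. A connection to a site offering only older protocols, or an expired or mismatched certificate, should then fail rather than proceed silently.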

Reference: NHS Digital [online]. Available: https://digital.nhs.uk/cyber-and-data-security/guidance-and-assurance/data-security-and-protection-toolkit-assessment-guides [Accessed 31/08/24]

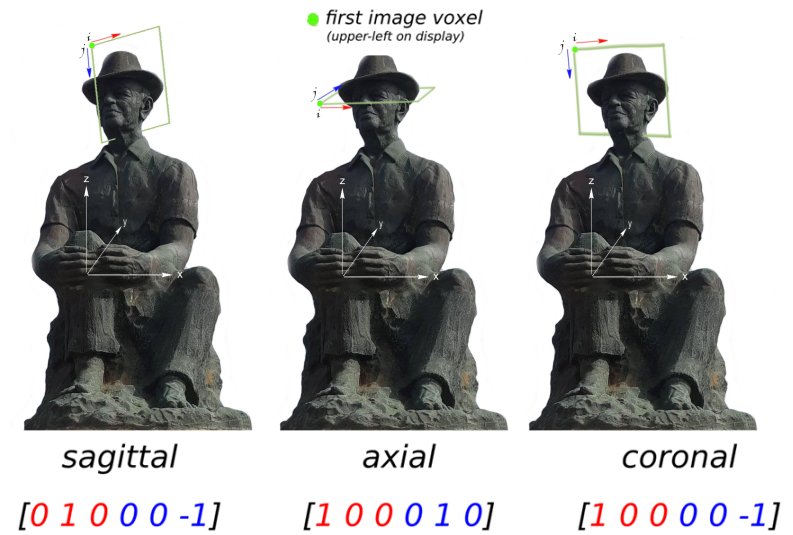

The DICOM reference has changed to: DICOM [online]. Available: http://www.dicomstandard.org/current/ [Accessed 17/05/24]

“standardized” has been changed to “standardised” in the footnote

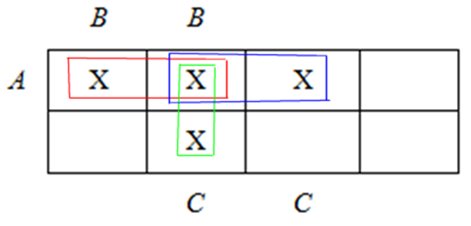

Sentence corrected to read: Of course, each attribute can only appear once in each child dataset as it must be locally unique.

Conversation line 4 corrected to: INDEX knows that these objects are stored on CACHE1

Figure 12.13 is corrected to read:

Surname^5^Snail^Forename^5^Brian^Address^16^Magic Roundabout^e-mail^20^brian@roundabout.com^Surname^9^Flowerpot^Forename^4^Bill^Address^6^Garden^e-mail^15^bill@weed.co.uk^EOF
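Here is a minimal reader for the Figure 12.13 stream, written without spaces so that each length prefix matches its value. It assumes a layout of field name, `^`, length, `^`, value, `^`, with `EOF` ending the stream. It also assumes that each new record begins at a `Surname` field. The function names are illustrative only.

```python
STREAM = ("Surname^5^Snail^Forename^5^Brian^Address^16^Magic Roundabout^"
          "e-mail^20^brian@roundabout.com^Surname^9^Flowerpot^Forename^4^Bill^"
          "Address^6^Garden^e-mail^15^bill@weed.co.uk^EOF")

def parse_fields(stream):
    """Return a list of (field name, value) pairs, stopping at EOF."""
    fields, pos = [], 0
    while not stream.startswith("EOF", pos):
        name_end = stream.index("^", pos)
        name = stream[pos:name_end]
        length_end = stream.index("^", name_end + 1)
        length = int(stream[name_end + 1:length_end])
        value_start = length_end + 1
        value = stream[value_start:value_start + length]  # exactly `length` chars
        pos = value_start + length
        if stream[pos:pos + 1] != "^":
            raise ValueError(f"length prefix of field {name!r} does not match its value")
        fields.append((name, value))
        pos += 1  # skip the separator after the value
    return fields

def group_records(fields, first_field="Surname"):
    """Start a new record each time `first_field` appears (an assumption)."""
    records = []
    for name, value in fields:
        if name == first_field or not records:
            records.append({})
        records[-1][name] = value
    return records

for record in group_records(parse_fields(STREAM)):
    print(record)
```

Because each value is read by its declared length rather than by searching for the next `^`, a value could itself contain `^` without confusing the reader.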

The guidance is currently a 60-page PDF, plus many more pages of supporting information. The current web site contains an online search engine, and the old published retention schedules are no longer available or current.

Footnote to "staff in lone working situations": Princess Cruises issues passengers with medallions that track their whereabouts on the ship. This is useful to passengers (you can find each other when there’s no phone signal, and the medallion also unlocks the cabin door as you approach) and to bar staff (theoretically you can order a drink and the medallion tells the staff where to deliver it, even if you’ve moved). It doesn’t work if you’re a teenager deliberately running around the ship, and it also fails when all the staff have is a photo of your face and all they can see is the back of your head, e.g. when there’s a show on.

For a simple system, though, a batch file copying all files may be sufficient, although copying only the files that have changed is preferable. For example:

@echo off

echo Assumes the backup drive is H:

pause

set /p dte=Enter year for latest file: 

xcopy d:\ "h:\Backup\Documents and Settings\paul\My Documents\" /e /r /y /h /exclude:medionexclude.txt /d:1-1-%dte%

echo All done

pause
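As an alternative to the batch file, here is a minimal Python sketch of "only copy what has changed". It copies a file when the backup copy is missing or older than the source, judged by modification time. It does not handle deletions, exclusions or file locking. The function name and any paths you pass to it are hypothetical.

```python
import shutil
from pathlib import Path

def incremental_backup(source, destination):
    """Copy files from source to destination only if they are new or have
    changed since the last backup (judged by modification time)."""
    source, destination = Path(source), Path(destination)
    copied = []
    for src in source.rglob("*"):
        if not src.is_file():
            continue
        dst = destination / src.relative_to(source)
        if dst.exists() and dst.stat().st_mtime >= src.stat().st_mtime:
            continue  # backup copy is already up to date
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copy2 also preserves the modification time
        copied.append(src.relative_to(source))
    return copied
```

For example, `incremental_backup("D:/Documents", "H:/Backup/Documents")` would copy everything on the first run. Later runs copy only files edited since then, because `copy2` gives each backup copy the same timestamp as its source.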

Of course, just having a plan isn’t enough – it needs to keep the business running at a decent level. On the August Bank holiday of 2023, there was a failure in the NATS system that is vital to air traffic control in the UK. (Ref: The Independent [online]. Available: https://www.independent.co.uk/travel/news-and-advice/air-traffic-control-bank-holiday-flights-holiday-cancelled-b2512417.html [Accessed 26/04/24])

More than 700,000 passengers were hit by the failure of the UK’s air-traffic control system on one of the busiest days of the decade. A report revealed that, when both the main and back-up computers failed, it took 90 minutes for the engineer rostered to oversee the system to reach work and start to fix it. Why? Because the engineer was at home when it happened. This was not their fault: they were rostered on call, so they first tried all the remote operations, and only when these failed did they travel to the site. The company that built the system wasn’t asked for assistance until more than 4 hours after the fault first manifested.

The failure collectively cost airlines tens of millions of pounds. Tim Alderslade, chief executive of Airlines UK, said: “This report contains damning evidence that Nats’ basic resilience planning and procedures were wholly inadequate and fell well below the standard that should be expected for national infrastructure of this importance.”

So the existence of a plan does not necessarily mean that business continuity is assured.

Footnote: Note that, contrary to what you might expect, the pattern in grep does not need to be inside quotation marks, unless it contains spaces or characters that the shell itself would interpret (such as *, ? or $).

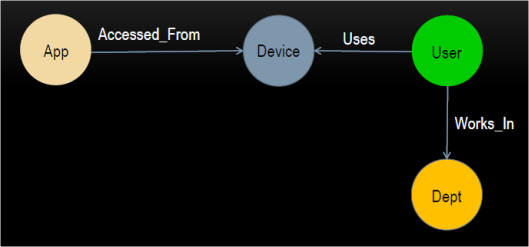

(Only two of these relationships are included in the example diagram, but the others are simple enough to visualise).

In the example above (Figure 15.5), a customer will place an order, using the product options, which will trigger the subsequent events (generate invoice etc.). The chain of triggered events will eventually terminate at a responsible actor, such as the Factory. Note that the customer appears twice – once as initiator and once as receiver.

ERD: In a database project, the ERD is the database schema.

But also remember: “Code never lies; comments sometimes do.” (Anonymous)

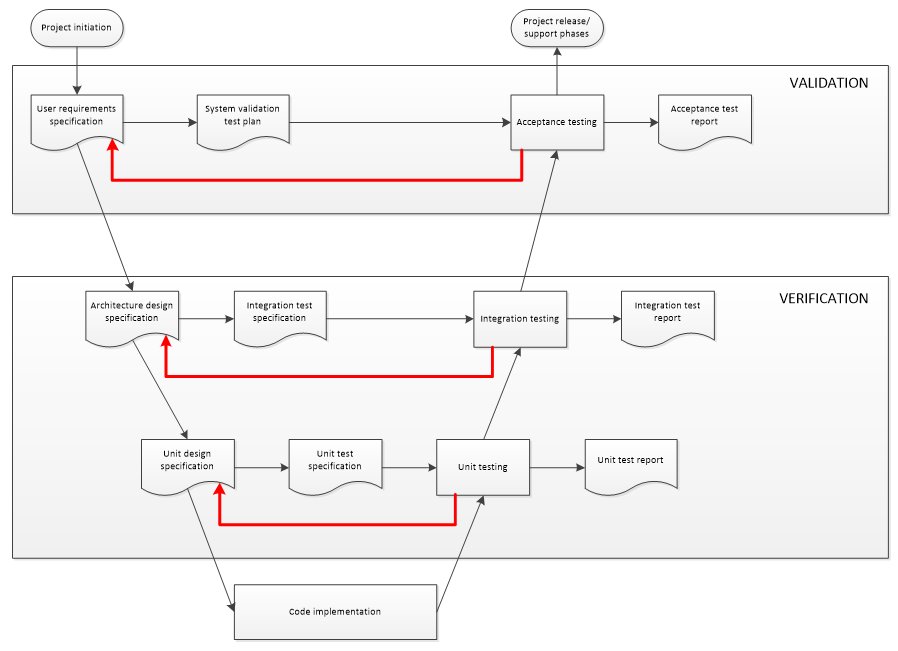

Some examples of software test suites are:

• RapidAPI

• Selenium

• Katalon Studio

• TestComplete (SmartBear)

• LambdaTest

80001-1:2010 corrected to 2021

Great Britain will follow MDD-based regulations until 2023 (updated to 2025 in 2023)

The latest guidance is at https://www.gov.uk/guidance/regulating-medical-devices-in-the-uk with a timeline at https://www.gov.uk/government/publications/implementation-of-the-future-regulation-of-medical-devices

On 1/8/23 the UK Government announced that the implementation of the UKCA had been delayed “indefinitely”. “The move has delighted business groups, which had been lobbying hard against the prospect of firms that sell into the much larger EU market having to carry out two separate sets of safety tests, with two separate regulatory bodies”. (Reference: Stewart H, 2023, The Brexit ‘red tape’ illusion has been exposed by the Tories’ CE mark climbdown, The Guardian, 1/8/23.)

The current advice is:

“The Government has introduced measures which provide that CE marked medical devices may be placed on the Great Britain market to the following timelines:

• general medical devices compliant with the EU medical devices directive (EU MDD) or EU active implantable medical devices directive (AIMDD) with a valid declaration and CE marking can be placed on the Great Britain market up until the sooner of expiry of certificate or 30 June 2028

• in vitro diagnostic medical devices (IVDs) compliant with the EU in vitro diagnostic medical devices directive (IVDD) can be placed on the Great Britain market up until the sooner of expiry of certificate or 30 June 2030, and

• general medical devices including custom-made devices, compliant with the EU medical devices regulation (EU MDR) and IVDs compliant with the EU in vitro diagnostic medical devices regulation (EU IVDR) can be placed on the Great Britain market up until 30 June 2030.”

The Government’s response to the 2021 consultation gave reason to believe that there will be a formal 'in-house exemption' from full conformity assessment and UKCA marking for devices made and used within the same health institution, provided certain conditions are met, and these conditions are broadly in line with those in the EU MDR: meeting relevant essential requirements, developing and manufacturing in-house devices under a QMS, documentation retention, adverse incident reporting etc. The response indicates that there will be a requirement to register in-house devices with MHRA and to register clinical investigations and performance studies. There is a further hint towards the possibility of a more open approach to providing in-house devices from one HI to another, provided this is not for commercial or profitable purposes.

For a very good overview, the book Cosgriff P, Memmott M, 2024, Writing In-House Software in Compliance with EU, UK, and US Regulations. Abingdon: CRC Press is recommended.

The NHS has recognised the importance of regulating AI use in healthcare to ensure that patient safety and privacy are protected. In April 2021, the NHS introduced a new regulatory framework for AI in healthcare called the NHS AI Lab (Reference: NHSX [online], 2023, The NHS AI Lab. Available: https://www.nhsx.nhs.uk/ai-lab/ [Accessed 21/08/24]). This is designed to support the ethical development and safe deployment of AI technologies in healthcare. Additionally, the UK government established the Centre for Data Ethics and Innovation (CDEI), an independent advisory body that provides guidance and advice on the ethical use of data and AI technologies.

Another initiative to ensure the safety, efficacy, and reliability of machine learning-based medical devices is Good Machine Learning Practice (GMLP). It is a set of 10 guiding principles jointly identified by the U.S. Food and Drug Administration (FDA), Health Canada and the UK MHRA. The principles and practices are intended to promote safe, effective and high-quality medical devices that use AI and ML, whilst ensuring that the development, testing, and deployment of machine learning-based medical devices are done in accordance with recognised quality standards and are consistent with regulatory requirements.

80001-1:2010 corrected to 2021

Footnote to IEC 82304-1: This covers safety and security of health software products placed on the market without dedicated hardware

This is a process-based standard that aims to help manufacturers of medical devices to design for high usability. It does not apply to clinical decision-making that may be related to the use of the device.

New footnote: It doesn’t, however, specify acceptable risk levels.